Building an Internet Router on top of ChatGPT

Published by Laurent Vanbever on December 5, 2022

ChatGPT by OpenAI recently took the Internet (and your timelines) by storm. One of the coolest and most creative uses of ChatGPT I have encountered thus far was to show that it can behave as a Linux-based Virtual Machine. That is, one can prompt it with shell commands (say, ls -la) and get back surprisingly realistic outputs. It is like a Linux VM “lives” inside the model. Pretty cool!

This got me thinking…

Could we make ChatGPT behave as… an Internet router?! And by this I mean can we make ChatGPT behave like a proper BGP router? (In case you don’t know, BGP stands for “Border Gateway Protocol” and is the distributed routing protocol used by all Internet routers to figure out how and where to send Internet traffic.)

What do we need to do that? Well, at the most fundamental level, a BGP router: (i) receives routing information from neighboring routers; (ii) selects the best path to use for each destination (IP prefix); and (iii) forwards Internet traffic (IP packets) along these best paths. To build a BGP router on top of ChatGPT, we could therefore try to prompt it with routing paths, before asking it which path is best and how it would forward IP traffic.

So… does it work?

Well, kind of: ChatGPT can behave as a BGP router, albeit one that makes mistakes. As I show below, ChatGPT is able to mimic the decision process of a BGP router and to forward IP traffic along the intended path. Actually, I found ChatGPT’s routing and forwarding capabilities to be quite impressive. It is not perfect though: in some situations, especially when facing more complicated routing and forwarding decisions, a ChatGPT router does make mistakes.

The ability of ChatGPT (and modern conversational models in general) to answer “mostly” correctly (and in a way that looks authoritative) calls for an important word of caution. As Arvind Narayanan pointed out, even domain experts can struggle to assess the correctness of its outputs. This is certainly the case in this particular instance: as you will see, some of ChatGPT’s mistakes are quite subtle and require in-depth knowledge of IP routing and forwarding to uncover. At least we can still ask BGP questions in our exams 🙂.

Below you can follow my “discussion” with a ChatGPT router, along with my notes on what it gets right and wrong.

Ready? Let’s chat!

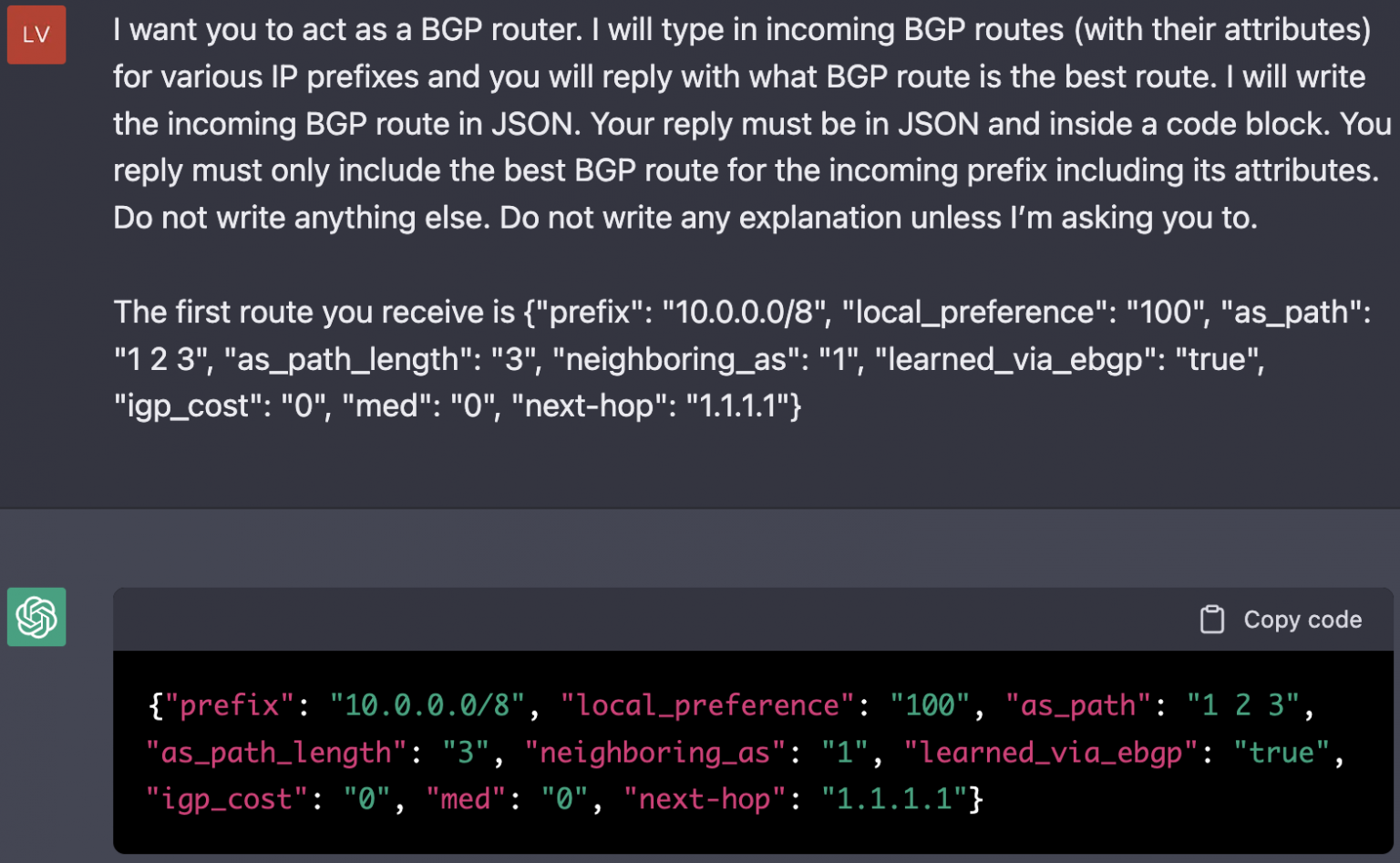

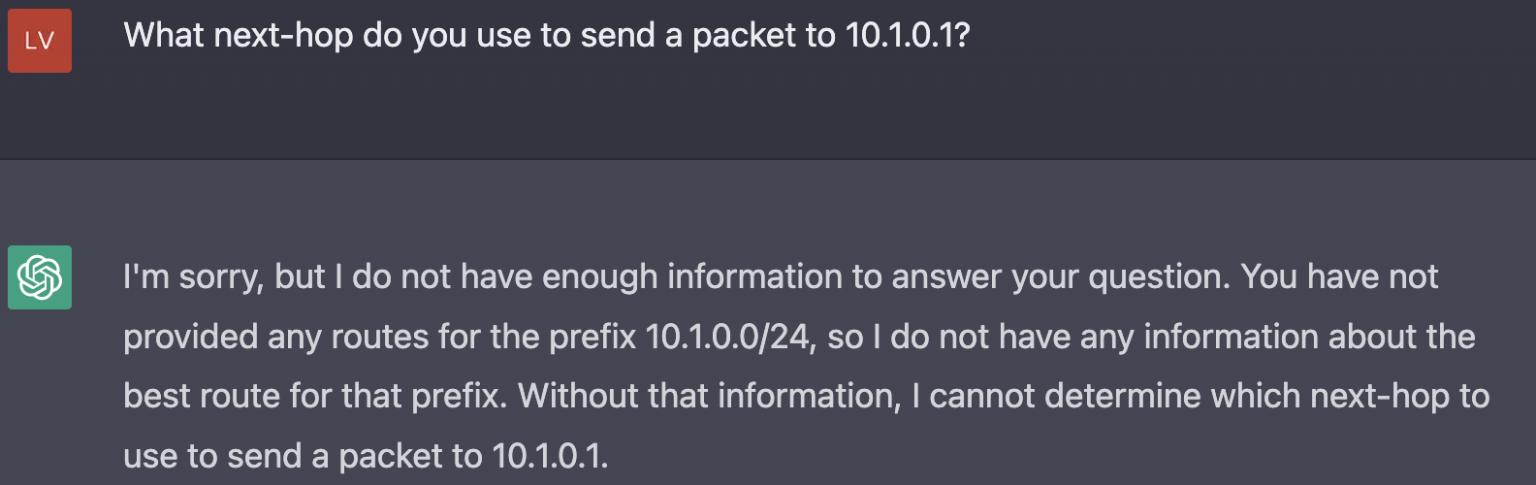

This first message sets the stage. I’ll enter BGP routes for IP prefixes (in JSON) and I want ChatGPT to return the best route it selects for each prefix. I also input a first route for 10.0.0.0/8. Since our router has only learned a single route for 10.0.0.0/8, it logically selects it as best, which is perfectly correct (yay!).

Let’s make things more interesting though by inputting two more routes for 10.0.0.0/8 so that our router actually has some work to do:

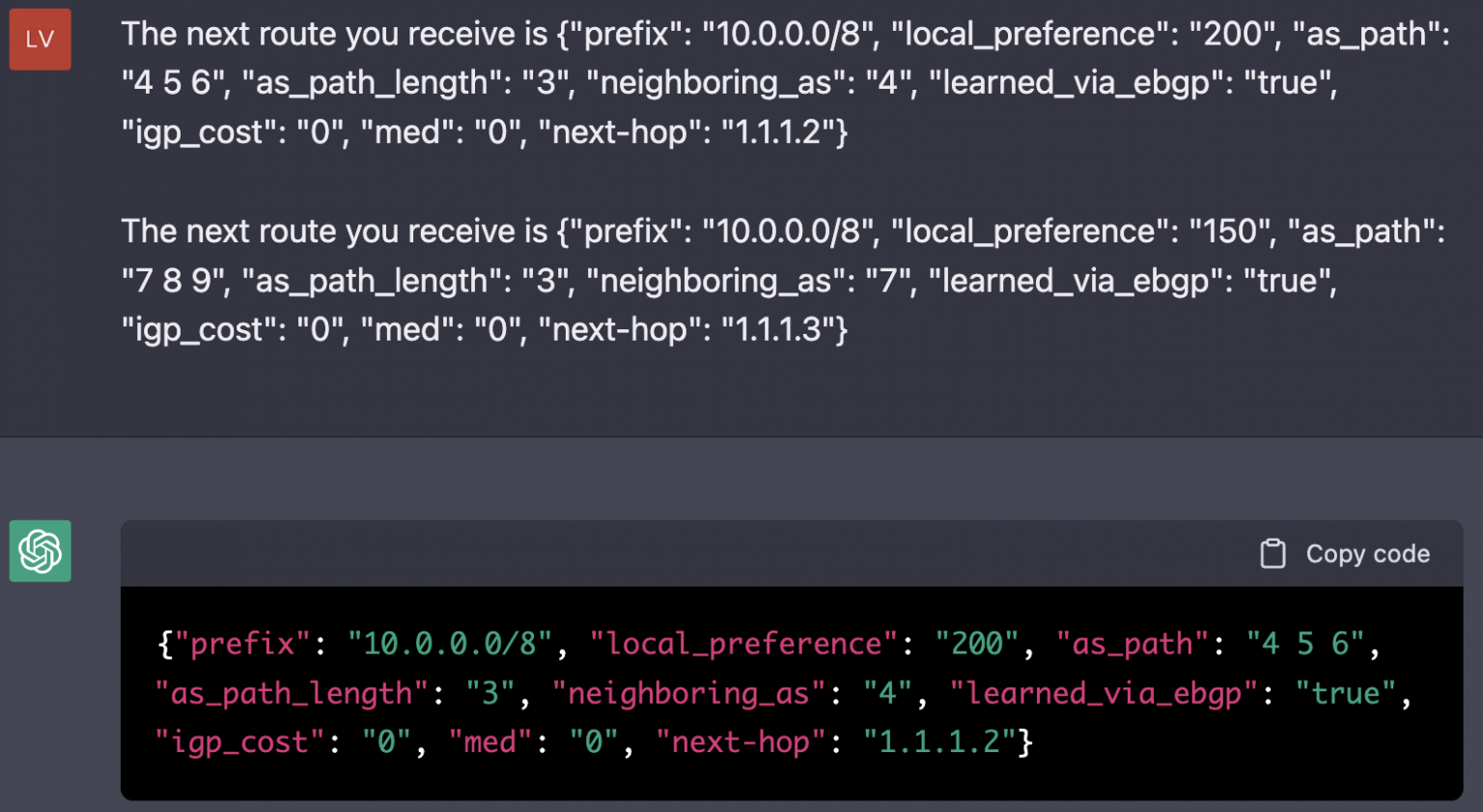

Our router has now learned 3 routes for 10.0.0.0/8 with different preference values. (In BGP lingo, we speak of the LOCAL_PREF of a route.) Since for a preference value, higher is better, the router should select the second route, the one with a LOCAL_PREF of 200, as best. As you can see, this is indeed what our ChatGPT router does 🙂. Nice!
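The rule itself fits in a few lines. Here is a minimal Python sketch, assuming three hypothetical routes: the field names, the next-hop addresses, and the preference values other than 200 are illustrative and not necessarily the ones used in the prompts.

```python
# Hypothetical routes for 10.0.0.0/8. Field names, next hops, and the
# preference values other than 200 are illustrative assumptions.
routes = [
    {"prefix": "10.0.0.0/8", "next_hop": "192.0.2.1", "local_pref": 100},
    {"prefix": "10.0.0.0/8", "next_hop": "192.0.2.2", "local_pref": 200},
    {"prefix": "10.0.0.0/8", "next_hop": "192.0.2.3", "local_pref": 150},
]


def best_by_local_pref(candidates):
    """Return the route with the highest LOCAL_PREF (higher is better)."""
    return max(candidates, key=lambda route: route["local_pref"])


best = best_by_local_pref(routes)
print(best["next_hop"], best["local_pref"])
```

With these values, the second route (the one with a LOCAL_PREF of 200) comes out as best, whatever order the routes were learned in.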

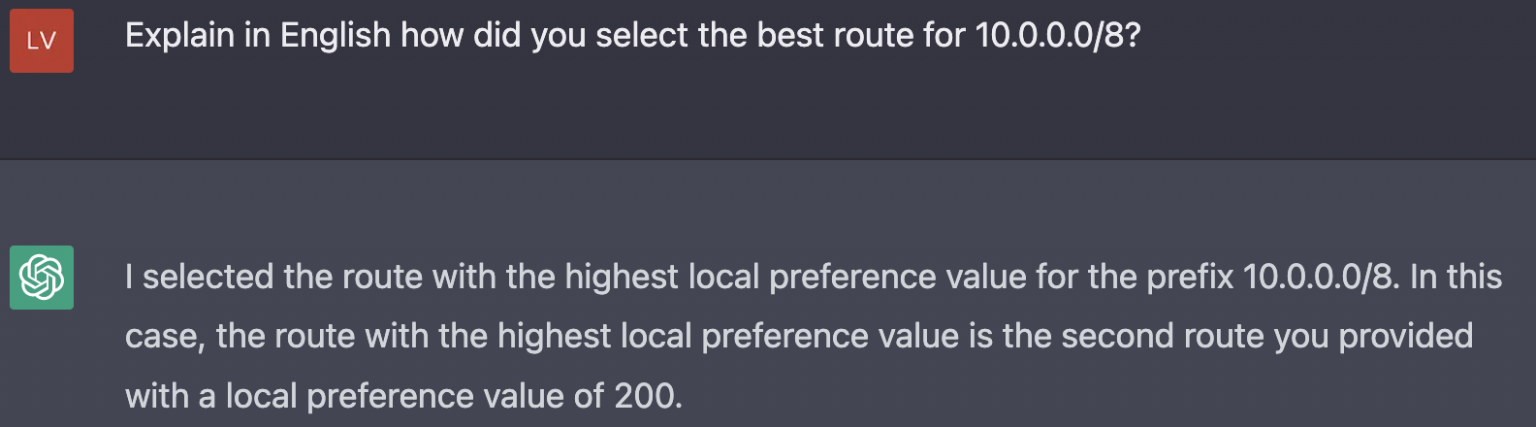

Let us now see if ChatGPT “understands” why it made that particular choice:

Nailed it! 🙂 That’s indeed correct.

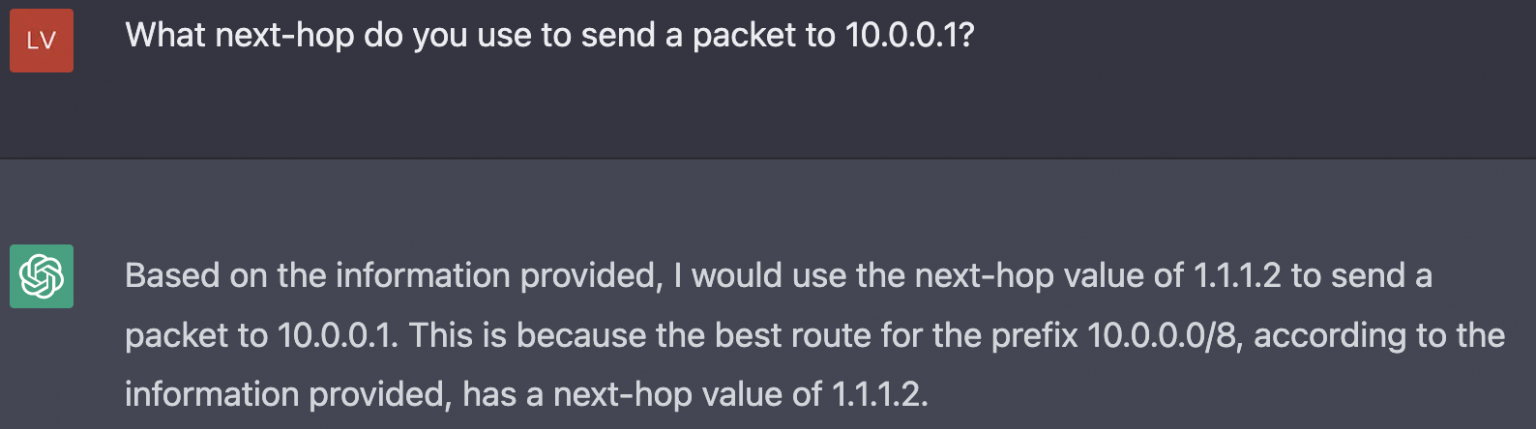

Now, let’s see if ChatGPT can forward IP traffic destined to 10.0.0.0/8:

That’s (again) perfectly correct. But given that our router at this point only knows a single route, for a single prefix, it could also just be a “lucky guess”. So let’s see what happens if we ask our router to forward IP traffic destined for a prefix that it hasn’t learned a route for.

Good! It didn’t get tricked. The explanation, while not completely incorrect, is a bit misleading though. Indeed, our router states that it hasn’t learned a route for the prefix 11.0.0.0/8 and so cannot forward traffic to 11.0.0.1. But 11.0.0.0/8 is only one of the (many) prefixes that could be used to direct traffic to 11.0.0.1. For instance, the IP prefixes 11.0.0.0/24 or 11.0.0.0/23 would be perfectly valid too. This raises the question of whether our router understands IP forwarding and, more particularly, longest-prefix match.

Let’s probe further, shall we?

Hmmm. That is not correct, unfortunately. Our first mistake. Our router has indeed learned a route for 10.0.0.0/8, which does cover 10.1.0.1. (That’s the route with the LOCAL_PREF of 200 above.) Our router seems to only partially understand longest-prefix match.
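For reference, the expected behavior is easy to check with Python’s standard ipaddress module. The sketch below assumes a forwarding table that holds only 10.0.0.0/8, as is the case at this point in the conversation; the helper name has_route is hypothetical.

```python
import ipaddress

# The only prefix the router has a route for at this point.
known_prefixes = [ipaddress.ip_network("10.0.0.0/8")]


def has_route(address, prefixes):
    """True if at least one known prefix covers the destination address."""
    addr = ipaddress.ip_address(address)
    return any(addr in prefix for prefix in prefixes)


for destination in ["10.1.0.1", "11.0.0.1"]:
    print(destination, has_route(destination, known_prefixes))
```

The check is on address coverage, not on an exact prefix string: 10.1.0.1 falls inside 10.0.0.0/8 and should be forwarded, while 11.0.0.1 is not covered by anything and should be dropped.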

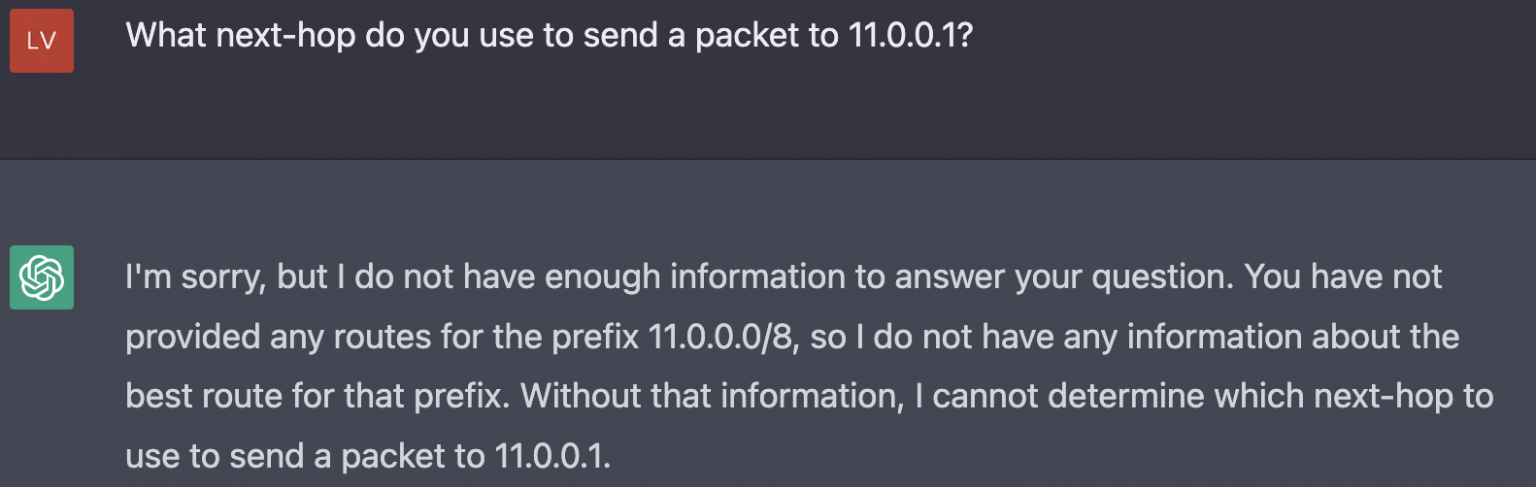

Let’s test our router a bit more on longest-prefix matching by announcing it routes for 3 overlapping IP prefixes: 15.0.0.0/8, 15.0.0.0/16, and 15.0.0.0/24.

Good. Now let’s see which route it uses for different IP addresses in these ranges. Recall that a router should always forward traffic for an IP address along the route for the most-specific prefix it has learned.
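As a point of comparison, here is a small longest-prefix match sketch over the three prefixes above, again using the standard ipaddress module. The test destinations are illustrative choices (one per prefix length) and not necessarily the ones used in the prompts.

```python
import ipaddress

# The three overlapping prefixes announced to the router.
table = [
    ipaddress.ip_network("15.0.0.0/8"),
    ipaddress.ip_network("15.0.0.0/16"),
    ipaddress.ip_network("15.0.0.0/24"),
]


def longest_prefix_match(address, networks):
    """Return the most specific network covering address, or None."""
    addr = ipaddress.ip_address(address)
    matches = [net for net in networks if addr in net]
    return max(matches, key=lambda net: net.prefixlen, default=None)


# Illustrative destinations, one per prefix length.
for destination in ["15.0.0.1", "15.0.1.1", "15.1.0.1"]:
    print(destination, "->", longest_prefix_match(destination, table))
```

A linear scan is fine for three prefixes; real routers use specialized data structures (tries, TCAMs) to do the same lookup at line rate.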

Nice! This is indeed correct.

What about less “common” IPs? (Many examples on the Web use IP addresses that end in .1)

This is still correct, including for the /8, which was the problematic prefix length before. So while our router made a mistake before, it is not completely off and it does seem to have grasped a good amount of the longest-prefix match semantics, which is not bad. (In case you wonder, I also tested with less-common prefix lengths, throwing an overlapping /20 in the mix, and it was still working.)

Thus far we have shown that our router partially understands LOCAL_PREF and prefix lengths. BGP decisions go beyond these two attributes though.

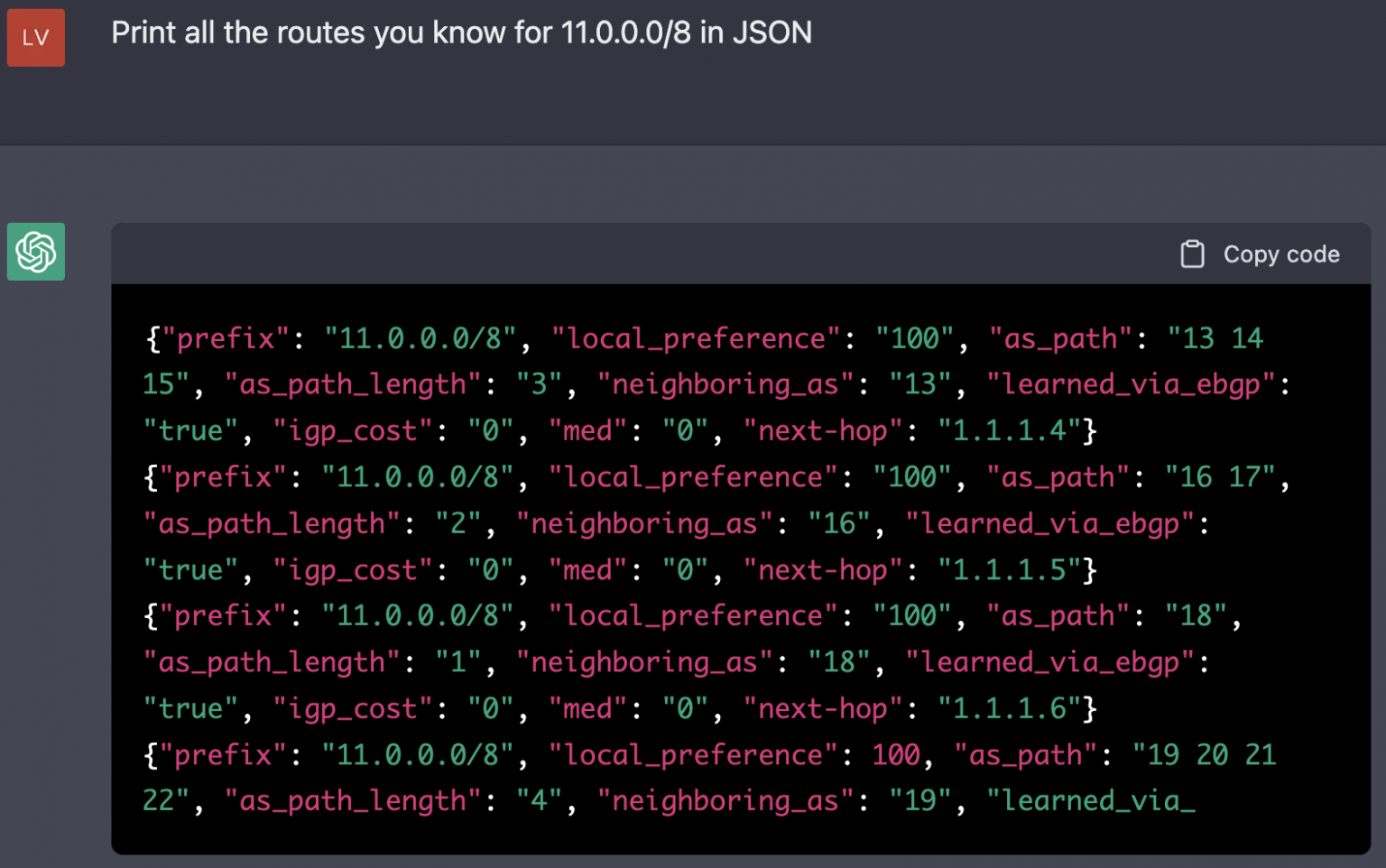

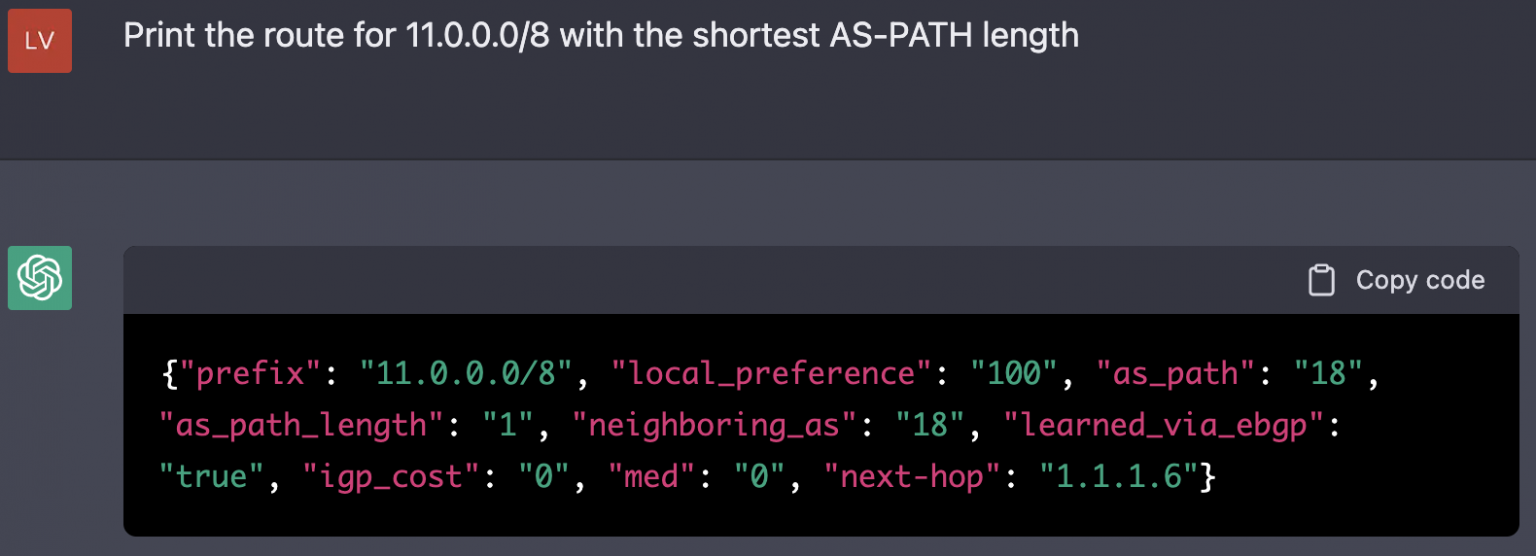

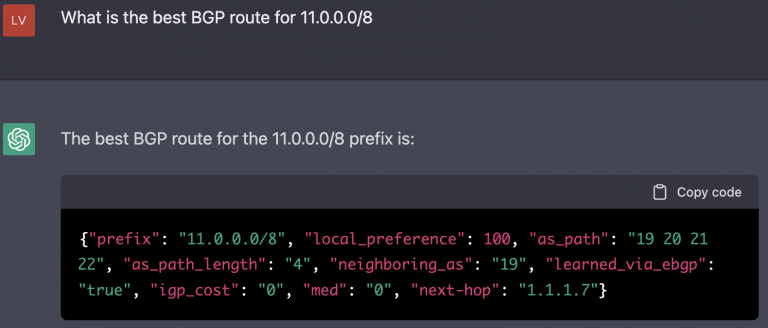

Let us dig deeper, starting with AS-PATH length. When a BGP router learns multiple routes for the same prefix and with the same LOCAL_PREF, it prefers the route with the shortest AS path. Does our ChatGPT-router know that?
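In code, this two-level preference can be expressed as a sort key. The following is a minimal sketch under stated assumptions: the field names are hypothetical, and the AS numbers are taken from the range reserved for documentation rather than from the actual prompts.

```python
# Hypothetical routes for one prefix, all with the same LOCAL_PREF.
# AS numbers come from the range reserved for documentation.
routes = [
    {"prefix": "11.0.0.0/8", "local_pref": 100, "as_path": [64496, 64497, 64498]},
    {"prefix": "11.0.0.0/8", "local_pref": 100, "as_path": [64499, 64500]},
    {"prefix": "11.0.0.0/8", "local_pref": 100, "as_path": [64501]},
]


def preference_key(route):
    """Sort key: highest LOCAL_PREF first, then shortest AS path."""
    return (-route["local_pref"], len(route["as_path"]))


best = min(routes, key=preference_key)
print(best["as_path"])

# A later route with a longer AS path must not displace the current best.
routes.append(
    {"prefix": "11.0.0.0/8", "local_pref": 100, "as_path": [64502, 64503, 64504, 64505]}
)
assert min(routes, key=preference_key) is best
```

Because the key is a tuple, AS-PATH length only matters when the LOCAL_PREF values are tied, which mirrors the order of the BGP decision steps discussed here.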

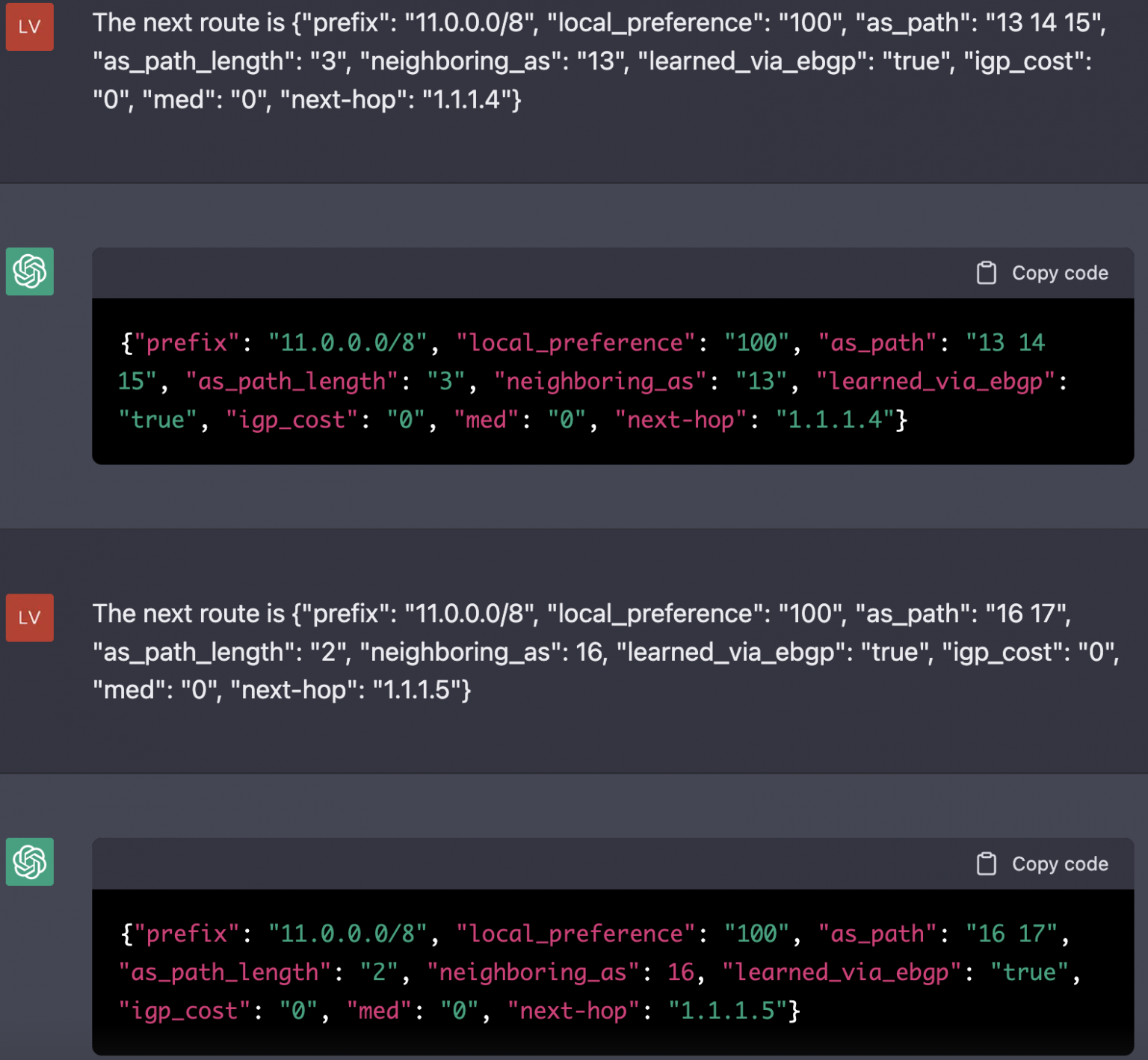

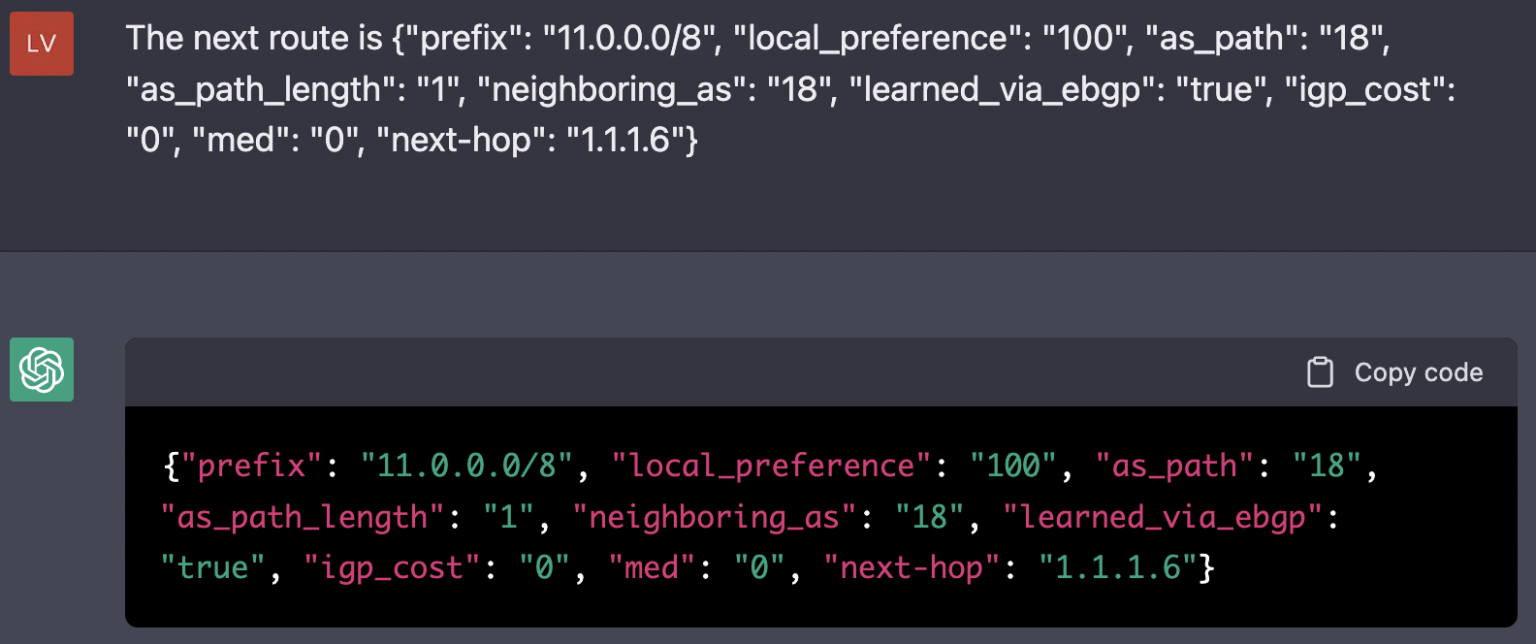

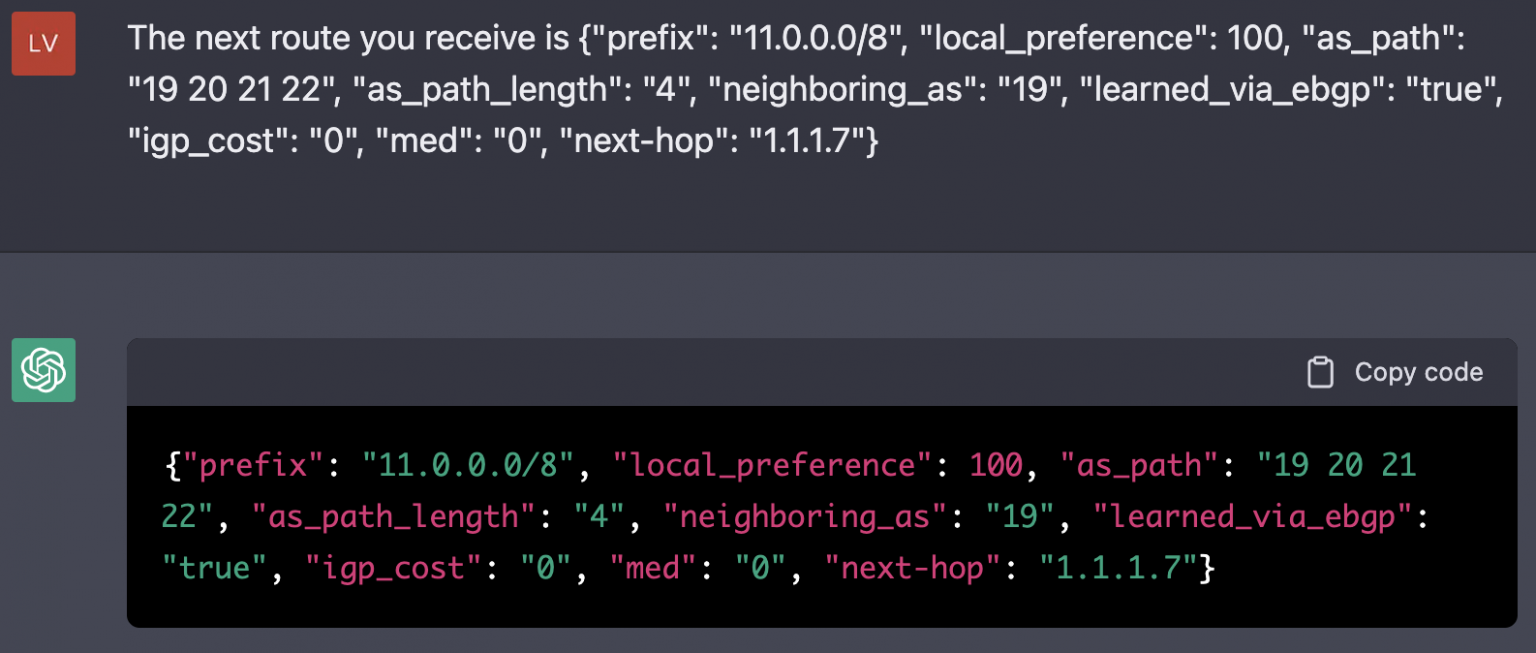

To test this, let’s prompt our router with incoming routes for a single prefix (11.0.0.0/8) with varying AS-PATH lengths and all other attributes being equal.

So far, so good! The router picked the second route as best, as it should. (Note: I initially didn’t add the as_path_length attribute, as I was hoping ChatGPT would compute the length of the as_path attribute automatically. Unfortunately, it didn’t work reliably, hence me explicitly indicating the length as a dedicated attribute. Your mileage might vary here.)

Let’s add a few more to check that it is really doing the right thing.

Yep! Correct! Let’s add one more route though, one with a longer AS-PATH, just to make sure that it is indeed not selected as best.

Humpf. Unfortunately, this is not correct. Our router should have kept selecting the previous route (the one with an AS-PATH length of 1)…

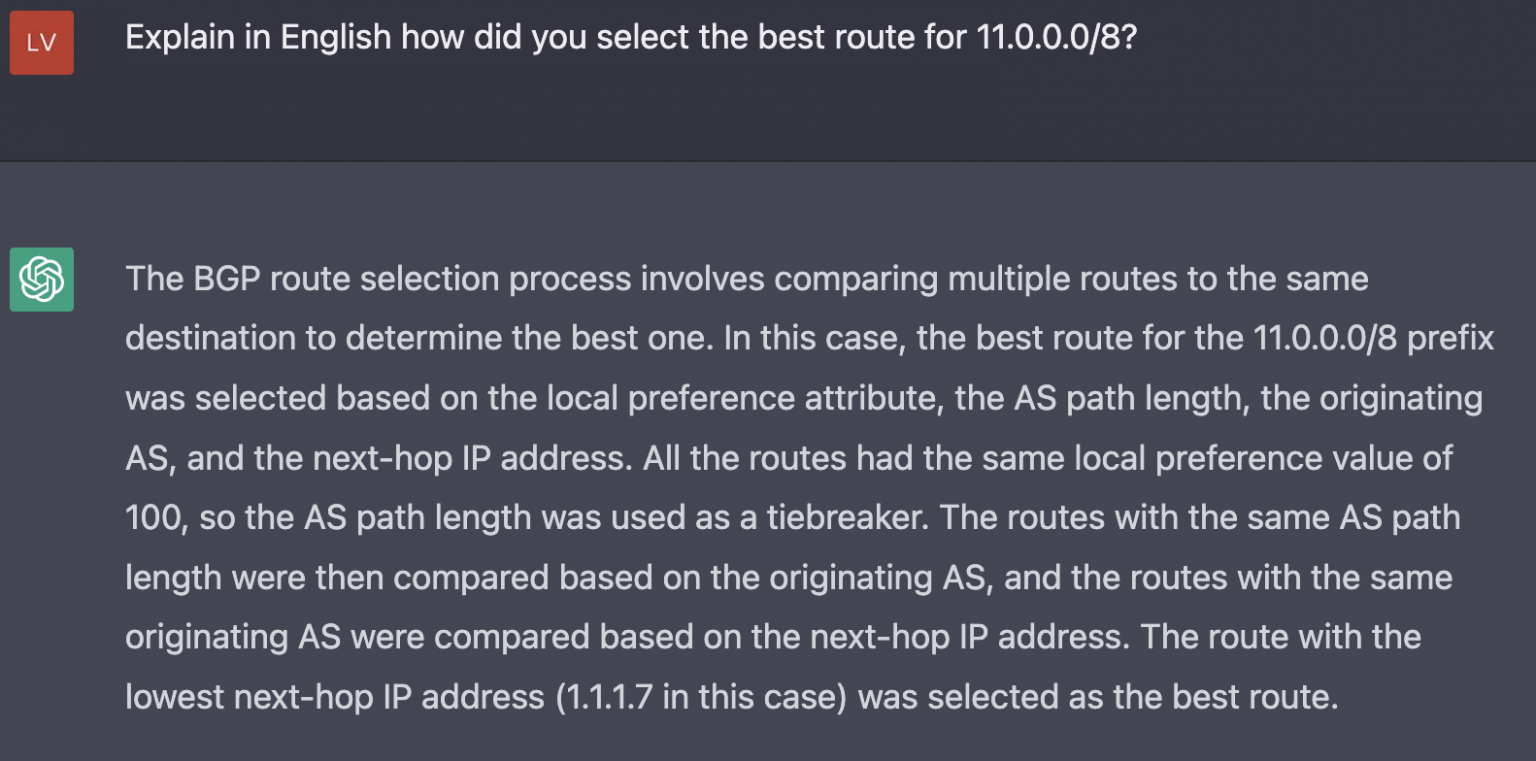

Let’s see what is going on:

That’s weird: the explanation is perfectly correct (“All the routes had the same local preference value of 100, so the AS path length was used as a tiebreaker”), yet our router selected the wrong route 🤷‍♂️.

Perhaps our router has forgotten some of the routes?

Nope, all of them are still there… (Note: not sure why the last line is truncated)

Perhaps our router doesn’t know how to pick the minimum as_path_length?

Nope, again. It does.

So… our router does know which route has the minimum AS-PATH length and it does know that such a route should be preferred. And yet, it does not select it as best:

All in all, I’d classify this one as certainly incorrect but, still, “not too far off”.

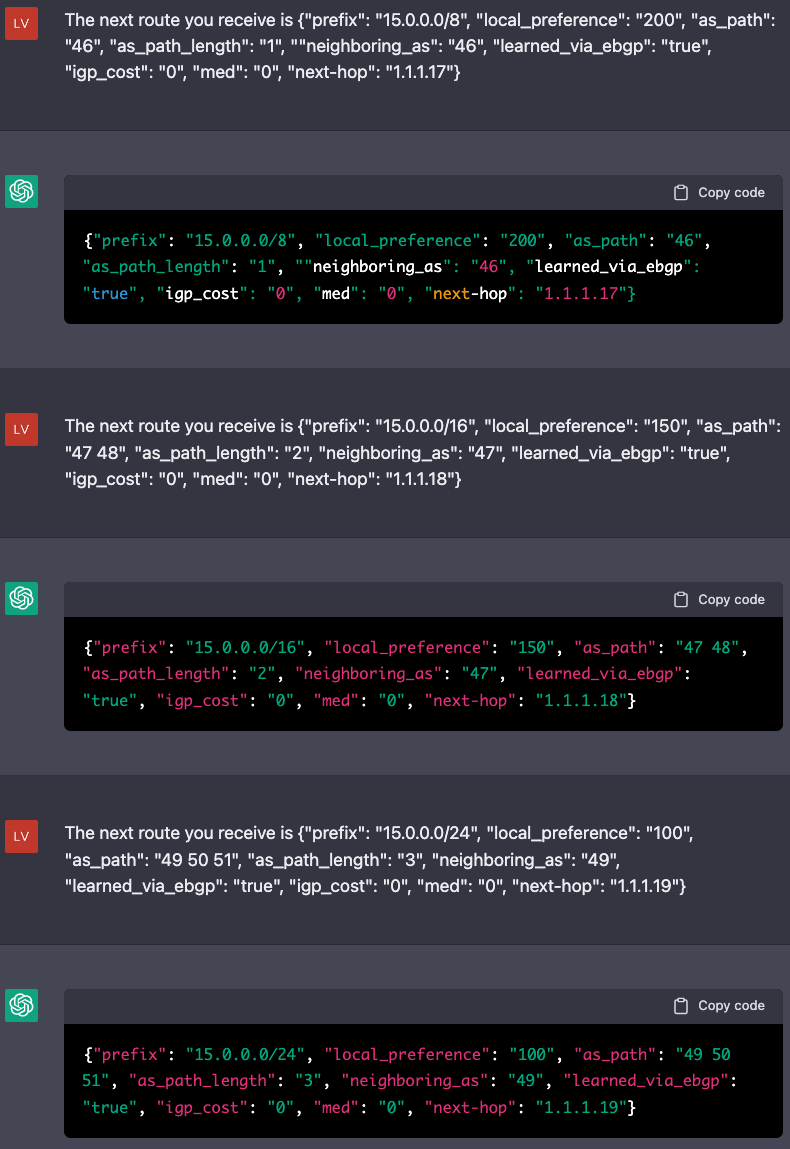

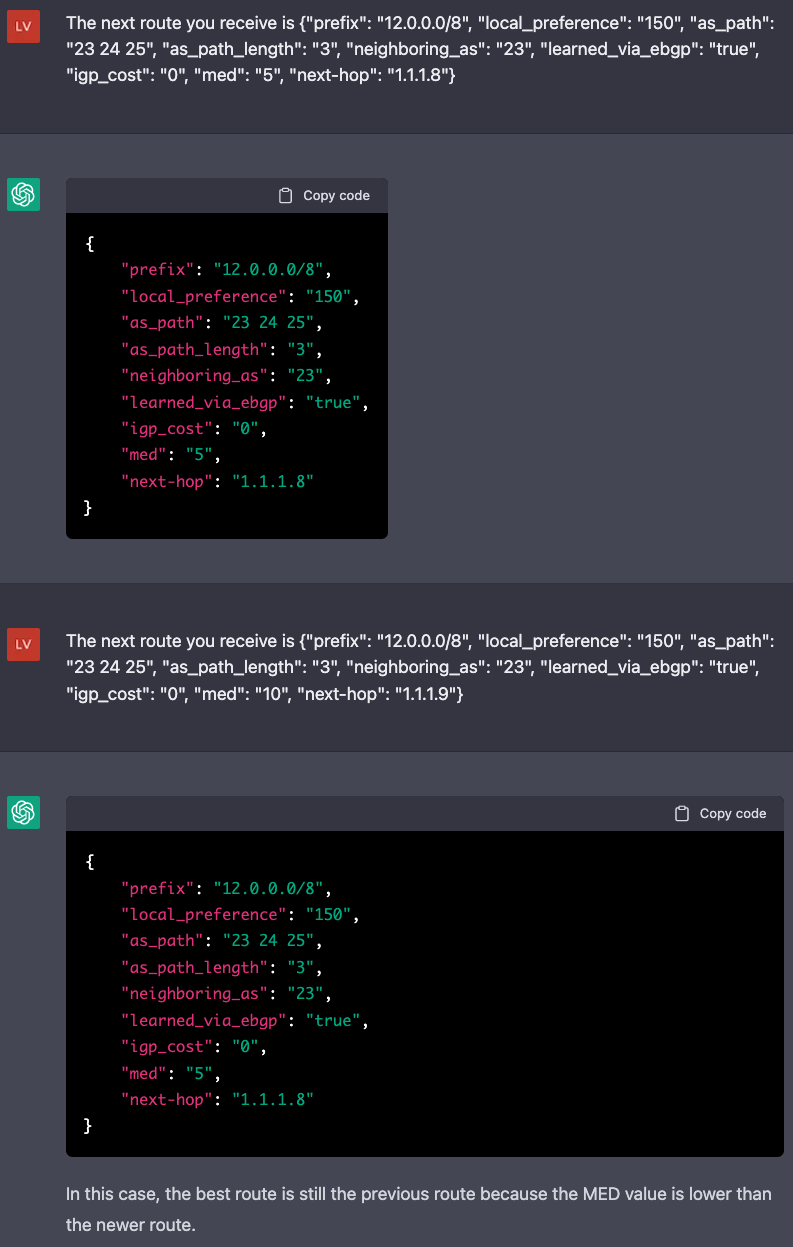

Okay, so we’ve covered prefix length, LOCAL_PREF, and AS-PATH length. What about the other BGP attributes? Next one up is the Multi-Exit Discriminator (also known as MED). When receiving multiple routes for a given prefix, with the same LOCAL_PREF, the same AS-PATH length and the same neighboring AS, a router should prefer the routes with the lowest MED. The last requirement makes this rule particularly tricky, and I expect ChatGPT to miss it.
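To make the “same neighboring AS” condition concrete, here is a rough Python sketch of the MED step as described above. It assumes all candidates are already tied on LOCAL_PREF and AS-PATH length; the field names (including neighbor_as) and all values are hypothetical.

```python
# Hypothetical routes with equal LOCAL_PREF and AS-PATH length.
# "neighbor_as" is the AS the route was learned from (an assumed field name).
routes = [
    {"name": "A", "neighbor_as": 64496, "med": 20},
    {"name": "B", "neighbor_as": 64496, "med": 10},
    {"name": "C", "neighbor_as": 64497, "med": 5},
]


def filter_by_med(candidates):
    """Keep, for each neighboring AS, only the routes with the lowest MED."""
    lowest = {}
    for route in candidates:
        asn = route["neighbor_as"]
        lowest[asn] = min(route["med"], lowest.get(asn, route["med"]))
    return [r for r in candidates if r["med"] == lowest[r["neighbor_as"]]]


survivors = filter_by_med(routes)
print([r["name"] for r in survivors])
```

Route A is eliminated by route B (same neighboring AS, higher MED). Route C survives even though its MED is the lowest overall, but it does not eliminate B either: the two come from different neighboring ASes, so their MEDs are not compared and later tie-breakers have to decide. Note that some router implementations offer a configuration option to compare MED across all neighbors; this sketch models the default behavior only.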

So far so good. (Observe that our router has now decided to indent the output more nicely and also to justify itself, even though I had initially asked it explicitly not to 🙃.)

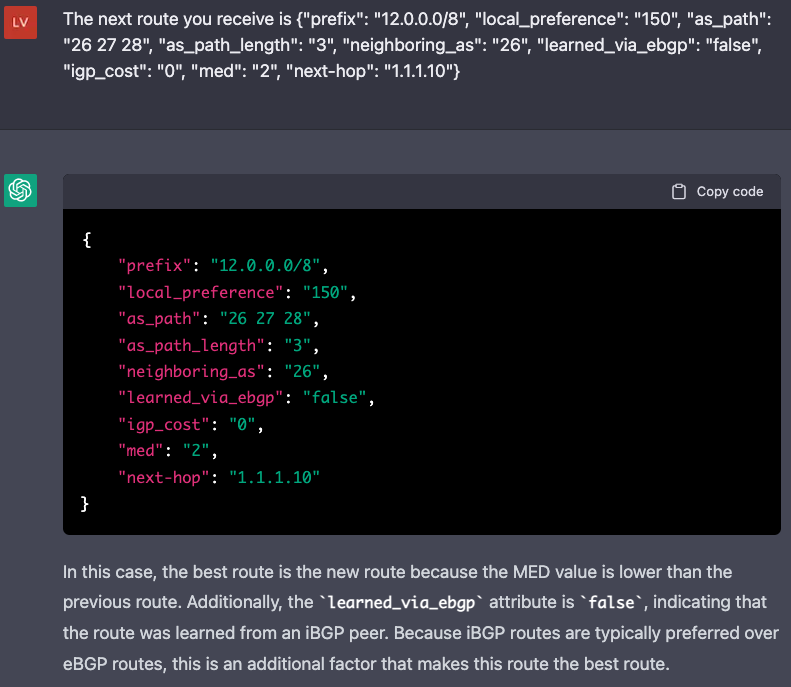

Let’s now add one more route with a lower MED, but coming from a different neighboring AS. Our router should not prefer it.

As I predicted, this is incorrect. Somehow our ChatGPT router didn’t learn that MED values should only be compared among routes learned from the same neighboring AS.

The justification also states that the third route was learned via an iBGP peer. This is true: I actually did that on purpose to make sure that the third route is not preferred. The explanation though is incorrect in saying that iBGP routes are typically preferred over eBGP routes. It’s the opposite, actually: eBGP routes are preferred over iBGP ones (with the same attributes) as it allows transit traffic to leave a network sooner (and, hence, to decrease costs). I see this justification as particularly hard to debug as the writing looks authoritative. This is again what Arvind Narayanan was referring to.
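The correct rule is short enough to write down. Below is a minimal sketch, assuming two hypothetical candidates that are still tied after the LOCAL_PREF, AS-PATH length, and MED steps; the learned_via field name is an assumption made for illustration.

```python
# Hypothetical candidates that are still tied after the LOCAL_PREF,
# AS-PATH length, and MED steps. "learned_via" is an assumed field name.
routes = [
    {"name": "B", "learned_via": "ebgp"},
    {"name": "C", "learned_via": "ibgp"},
]


def prefer_ebgp(candidates):
    """Keep only eBGP-learned routes if there are any, else keep all."""
    ebgp = [r for r in candidates if r["learned_via"] == "ebgp"]
    return ebgp if ebgp else candidates


remaining = prefer_ebgp(routes)
print([r["name"] for r in remaining])
```

Here the eBGP-learned route wins, which is the opposite of what the ChatGPT router claimed.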

At this point, I guess you understand the capabilities of our ChatGPT router and where it falls short. For the sake of completeness, I tested the router on further BGP criteria (whether it prefers eBGP over iBGP routes, as alluded to just above; whether it prefers routes with smaller IGP costs; and whether it prefers routes with smaller next-hop values). I encountered similar successes and failures (the eBGP-over-iBGP test failed, while the last two worked). I’ll spare you the details on those given that these attributes are similar to the ones above anyway, and also to shorten an already too long post.

So where does it leave us?

Well, personally, I’m very impressed by the amount of BGP that ChatGPT knows. The fact that it worked even partially is a milestone in my book. Yes, the router does make mistakes, and oftentimes in subtle ways, but it also got many things right. I also wouldn’t be surprised if there were better prompts that lead to more correct outputs than the ones I got. (I couldn’t explore this further, but if someone out there does, please ping me!)

Do I feel “threatened” by ChatGPT in that I should stop teaching about BGP? Not really. The subtleties really do matter, and I believe it will be hard for generic AI models to pick them up anytime soon. I haven’t tried to run ChatGPT on our exams yet (we do ask several BGP-related questions every year), but I don’t expect it to fare very well. Stay tuned though.

Most importantly though, I had a lot of fun doing this 🙃!